Projects

Table of Contents

- Fracture Characterisation and Geometry for Motion Planning

- Fracture Detection and Localisation

- Bilateral Teleoperation Franka Panda and Geomagic

- Hololyo

- Nao Interface

- The Little Knight

First Order Motion Model for Image Animation Real-Time Implementation

An example of a real-time application I developed, built on top of the groundbreaking work of Aliaksandr Siarohin et al. The original first-order motion model generates highly realistic animations from offline videos.

In this version, I adapted the code to run the model in real time on a webcam stream, without retraining the model. This is only a first test of the application, so there is still a lot of room for improvement and for further exploring the capabilities of this technology.

Create Enemy Behaviour with C# in Unity

Developed an enemy behaviour tree following the Coursera course Create Enemy Behaviour with C# in Unity. Learned how to make enemy characters patrol an area, chase a player within range, and attack. Learned how to build a behaviour tree using the following Unity concepts:

- Transform-based movement

- Rigidbody collisions and triggers

- Interfaces

- Events
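The patrol, chase and attack logic can be illustrated with a minimal behaviour tree. The sketch below is written in Python rather than C# and is not the course's code: the class names (`Selector`, `Sequence`, `InRange`, `SetAction`), the `Enemy` fields and the range values are all assumptions made for illustration.

```python
import math
from dataclasses import dataclass
from enum import Enum


class Status(Enum):
    SUCCESS = 1
    FAILURE = 2


@dataclass
class Enemy:
    x: float
    y: float
    chase_range: float = 5.0   # hypothetical value
    attack_range: float = 1.0  # hypothetical value
    action: str = "idle"


class Selector:
    """Tries children in order and succeeds on the first one that succeeds."""

    def __init__(self, *children):
        self.children = children

    def tick(self, enemy, player_pos):
        for child in self.children:
            if child.tick(enemy, player_pos) is Status.SUCCESS:
                return Status.SUCCESS
        return Status.FAILURE


class Sequence:
    """Runs children in order and fails on the first one that fails."""

    def __init__(self, *children):
        self.children = children

    def tick(self, enemy, player_pos):
        for child in self.children:
            if child.tick(enemy, player_pos) is Status.FAILURE:
                return Status.FAILURE
        return Status.SUCCESS


class InRange:
    """Condition node: is the player within the named range of the enemy?"""

    def __init__(self, range_attr):
        self.range_attr = range_attr

    def tick(self, enemy, player_pos):
        distance = math.dist((enemy.x, enemy.y), player_pos)
        limit = getattr(enemy, self.range_attr)
        return Status.SUCCESS if distance <= limit else Status.FAILURE


class SetAction:
    """Action node: records which behaviour the enemy should perform."""

    def __init__(self, name):
        self.name = name

    def tick(self, enemy, player_pos):
        enemy.action = self.name
        return Status.SUCCESS


def build_tree():
    # Priority order: attack if close, else chase if in range, else patrol.
    return Selector(
        Sequence(InRange("attack_range"), SetAction("attack")),
        Sequence(InRange("chase_range"), SetAction("chase")),
        SetAction("patrol"),
    )
```

Calling `build_tree().tick(enemy, player_pos)` once per frame updates `enemy.action`. In Unity the action nodes would instead drive Transform-based movement or raise events.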

Fracture Characterisation and Geometry for Motion Planning

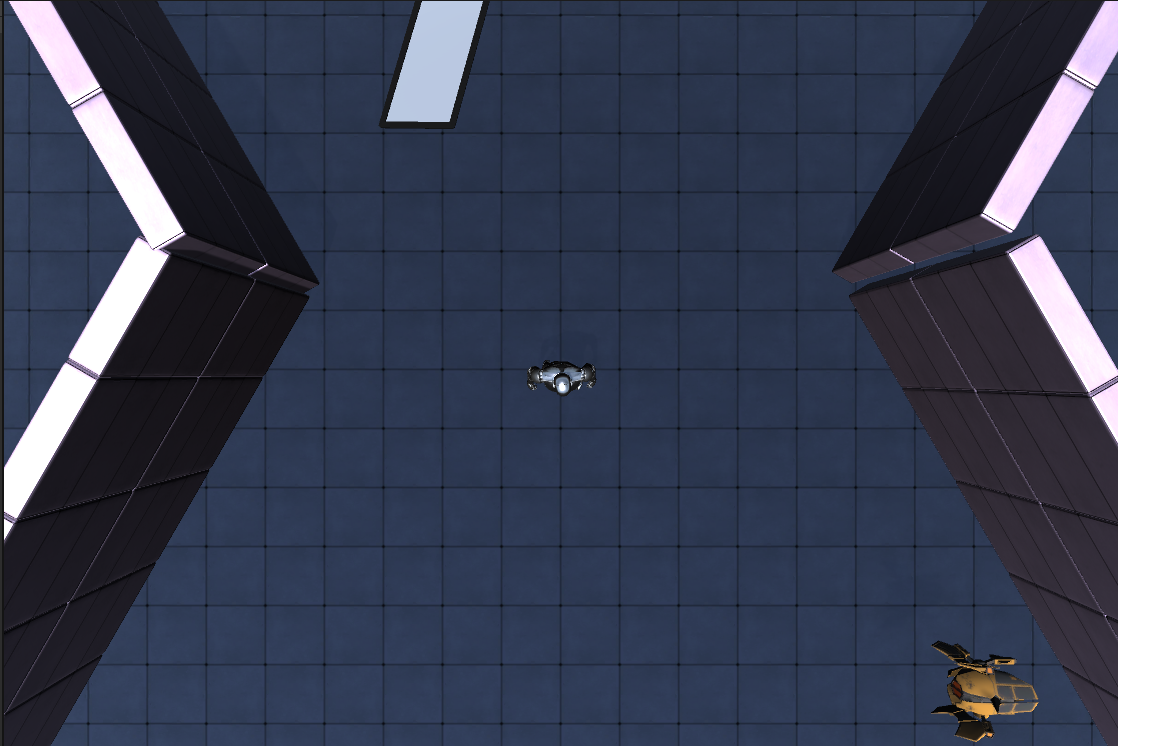

We analyse images of cracks to extract their geometry and to plan an optimal-control tactile exploration. Image processing and computer vision techniques produce a skeletonised version of the fracture image, which is then transformed into a preliminary graph. The midpoints (red points) of the edges of this preliminary graph are extracted, and an updated graph is built from them. Each midpoint is connected to every other midpoint, and the Euclidean distance between two points is used as the weight of the edge joining them. The steps are shown in the image above.

Using these coordinates and weights, a minimum spanning tree is computed and used to define the motion plan for a robotic manipulator.
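As a rough illustration of the last step, the sketch below builds the complete graph over a list of midpoints, weights each edge by Euclidean distance, and extracts a minimum spanning tree. The text does not say which algorithm was used, so Prim's algorithm is an assumption here, as are the function name `euclidean_mst` and the example coordinates.

```python
import math


def euclidean_mst(points):
    """Return MST edges (i, j, weight) of the complete Euclidean graph.

    Uses Prim's algorithm in O(n^2), which suits a dense, fully
    connected graph such as the one described above.
    """
    n = len(points)
    if n == 0:
        return []

    in_tree = [False] * n
    best_cost = [math.inf] * n   # cheapest known edge into each vertex
    best_parent = [-1] * n       # tree vertex on the other end of that edge
    best_cost[0] = 0.0           # grow the tree from the first point
    edges = []

    for _ in range(n):
        # Pick the cheapest vertex that is not in the tree yet.
        u = min((i for i in range(n) if not in_tree[i]),
                key=lambda i: best_cost[i])
        in_tree[u] = True
        if best_parent[u] != -1:
            edges.append((best_parent[u], u, best_cost[u]))

        # Relax the edges from u to every vertex still outside the tree.
        for v in range(n):
            if not in_tree[v]:
                weight = math.dist(points[u], points[v])
                if weight < best_cost[v]:
                    best_cost[v] = weight
                    best_parent[v] = u

    return edges


# Hypothetical midpoints in pixel coordinates.
midpoints = [(0, 0), (1, 0), (2, 0), (2, 1)]
tree_edges = euclidean_mst(midpoints)
```

The resulting edge list could then be traversed, for example depth-first, to order the waypoints sent to the manipulator. That traversal is not shown and would depend on the planner.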

Fracture Detection and Localisation

Developed an innovative approach combining vision and tactile sensing to detect and characterise surface cracks. The proposed algorithm localises surface cracks in a remote environment from videos or photos taken by an on-board robot camera, and then performs an automatic tactile inspection of the surfaces. Faster R-CNN, a deep learning-based object detector, identifies the location of potential cracks. A random forest classifier then processes the tactile data to confirm their presence. The algorithm can localise and explore fractures both offline and in real time. When both modalities are used cooperatively, the model correctly detects 92.85% of the cracks, compared with 46.66% when using vision alone. Exploring a surface using only tactile sensing requires around 199 seconds. This time is reduced to 31 seconds when vision and tactile sensing are used together. This approach may also be applied in extreme environments (e.g. in nuclear plants), since gamma radiation does not interfere with the basic sensing mechanism of fibre optic-based sensors.

For more information, refer to the related papers 1, 2

Bilateral Teleoperation Franka Panda and Geomagic

Developed the master side, in C++, of a bilateral teleoperation system with a Geomagic Touch as the master device and a Franka Emika Panda as the slave robot. The system combines hybrid position and rate control modes with an interoperability protocol.
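To give an idea of what a hybrid position and rate scheme can look like, here is a minimal sketch in Python (the actual master side is in C++). It is not the project's implementation: the switching rule, the class name `HybridController` and all gains and radii are assumptions. Inside a small sphere around the master's home position, the master displacement is scaled into a slave position; outside it, the excess displacement is turned into a velocity that shifts the slave workspace.

```python
import math
from dataclasses import dataclass, field


@dataclass
class HybridController:
    position_radius: float = 0.05  # m, hypothetical switch threshold
    position_scale: float = 2.0    # slave metres per master metre (assumed)
    rate_gain: float = 1.5         # (m/s) per metre of excess (assumed)
    offset: list = field(default_factory=lambda: [0.0, 0.0, 0.0])

    def update(self, master_pos, dt):
        """Return (mode, slave_target) for one control step of length dt."""
        norm = math.sqrt(sum(c * c for c in master_pos))

        if norm > self.position_radius:
            # Rate mode: drift the workspace offset along the master direction,
            # with a speed proportional to how far the stylus is past the radius.
            mode = "rate"
            excess = norm - self.position_radius
            direction = [c / norm for c in master_pos]
            for i in range(3):
                self.offset[i] += self.rate_gain * excess * direction[i] * dt
            # Hold the position contribution at the sphere boundary.
            local = [d * self.position_radius for d in direction]
        else:
            # Position mode: direct scaled mapping of the master displacement.
            mode = "position"
            local = list(master_pos)

        target = [o + self.position_scale * c
                  for o, c in zip(self.offset, local)]
        return mode, target
```

Clamping the position term at the boundary keeps the slave target continuous when the controller switches between the two modes.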

For more information, refer to the paper

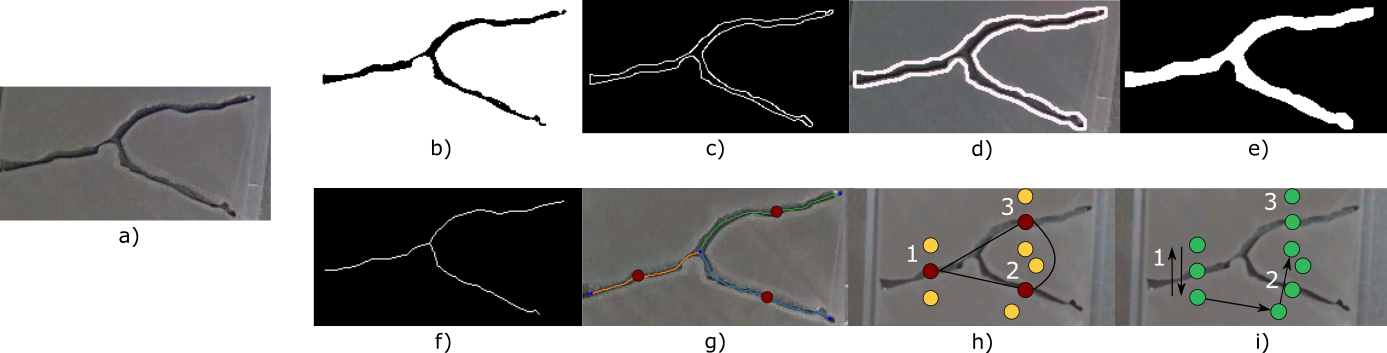

Hololyo

Portable augmented reality application that provides visual feedback to amputees during surface electromyography data acquisitions. Developed in Unity, it combines the Microsoft HoloLens and the Thalmic Labs Myo. In the augmented environment rendered by the HoloLens, the user controls a virtual hand through surface electromyography. With the virtual hand, the user can move objects in augmented reality and, guided by the visual feedback, train to activate the right muscles for each movement.

For more information, refer to the paper

Nao Interface

Mobile application for Android which allows the user to send vocal commands to a Nao Robot, through a client-server connection.

The project consists of two applications that work together:

- An Android app which sends the user's vocal commands in real time to a Choregraphe project;

- A Choregraphe interface which transmits the received message to the NAO robot.

The NAO is able to recognise a set of words in any sentence and execute multiple commands at the same time.
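Spotting known words inside a free-form sentence can be sketched as below. This is only an illustration of the idea, not the app's code: the `COMMANDS` vocabulary, the action names and the function `extract_commands` are hypothetical, and the real project relies on Choregraphe for the robot side.

```python
import re

# Hypothetical vocabulary mapping spoken keywords to robot actions.
COMMANDS = {
    "sit": "sit_down",
    "stand": "stand_up",
    "walk": "walk_forward",
    "wave": "wave_hand",
}


def extract_commands(sentence):
    """Return the actions triggered by known keywords, in spoken order."""
    words = re.findall(r"[a-z']+", sentence.lower())
    actions = []
    for word in words:
        action = COMMANDS.get(word)
        # Skip unknown words and avoid queuing the same action twice.
        if action is not None and action not in actions:
            actions.append(action)
    return actions


requested = extract_commands("Please stand up and then wave to me")
```

Each returned action could then be sent as a message over the client-server connection, with several actions dispatched together when a sentence contains more than one keyword.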

The Little Knight

The project is based on the WebGL library, which provides a 3D graphics API to browsers and allows 3D scenes to be created in JavaScript using the HTML5 Canvas element. Two additional libraries are used:

- Three.js, an open source library based on WebGL, which provides a number of functions and structures that simplify the creation of complex objects and scenes while leaving the programmer free to customise the scene.

- Physi.js (website), another open source library, which handles physics simulation through Three.js functions.

The project is a third-person game in which the player controls a character named Knight. The goal of the game is to reach the dragon on the other side of the cave while avoiding the river of lava. On victory, the game stops and a final victory screen appears. If the character falls into the lava, the losing screen appears instead.